System Architecture

The Athena cluster consists of 140 compute nodes linked together by a high-speed InfiniBand network fabric. A front-end node (athena0) handles user login, compilation, and job submission. The compute nodes are accessed via batch jobs submitted to PBS.

Compute Node Configuration

Athena compute nodes were installed in two phases: Phase I comprises the 128 original nodes, and Phase II comprises 12 newer nodes added about 1.5 years later.

Each of the 128 Phase I compute nodes contains 2 quad-core Intel 2.33 GHz E5345 Xeon processors, for a total of 1024 cores available for computation. The E5345 is an 80W, 65nm process, socket LGA771 chip with a bus speed of 1.333 GHz. Each pair of cores shares 4MB of L2 cache clocked at 2.33 GHz. For more information, see the Intel spec page for the E5345. Each compute node contains 8GB of RAM (1 GB per core).

VPL has added 12 more compute nodes, known as "Phase II" nodes, each of which is equipped with 2 top-of-the-line quad-core Intel 3.16 GHz X5460 Xeon processors. These use the newer 45nm fabrication process and have 6MB of L2 cache per core-pair (compared with 4MB on the E5345s). For more information, see the Intel spec page for the X5460 (http://processorfinder.intel.com/details.aspx?sSpec=SLANP). Each of the new VPL nodes has 16GB of RAM, or 2 GB per core.

All of the compute nodes currently run CentOS 4.5.

Network

The compute nodes are connected to one another via InfiniBand, whose principal advantages are low latency and high bandwidth. Network latency is a measure of the typical time required to send a message from one node to another. On InfiniBand, the latency is a few microseconds, more than 20 times lower than the 60-plus microseconds of Gigabit Ethernet. Our current InfiniBand configuration (4x DDR) supports a theoretical maximum bandwidth of 16 Gb/s. Not only is this 16 times the theoretical maximum of Gigabit Ethernet, but in practice it is also possible to attain a much larger fraction of InfiniBand's theoretical bandwidth than of Gigabit Ethernet's. Consequently, one usually sees over 30 times greater bandwidth with this InfiniBand configuration than with Gigabit Ethernet.
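As a rough illustration of how latency and bandwidth combine, the sketch below uses a simple "latency plus size divided by bandwidth" model with the nominal figures quoted above. The 3 microsecond InfiniBand latency is an assumed stand-in for "a few microseconds", and the function and dictionary names are illustrative only. Because the model uses theoretical maximum bandwidths, it does not capture the larger gap seen in practice.

```python
# Simple point-to-point message model: time = latency + size / bandwidth.
# The 3 microsecond InfiniBand latency is an assumption ("a few microseconds");
# the other figures are the nominal values quoted in the text.

def transfer_time(message_bytes, latency_s, bandwidth_bits_per_s):
    """Estimated seconds to deliver one message of the given size."""
    return latency_s + (message_bytes * 8) / bandwidth_bits_per_s

INFINIBAND = {"latency_s": 3e-6, "bandwidth_bits_per_s": 16e9}  # latency assumed
GIGABIT = {"latency_s": 60e-6, "bandwidth_bits_per_s": 1e9}

for size in (8, 64 * 1024, 16 * 1024 * 1024):
    t_ib = transfer_time(size, **INFINIBAND)
    t_ge = transfer_time(size, **GIGABIT)
    print(f"{size:>10d} bytes: InfiniBand {t_ib * 1e6:10.1f} us, "
          f"Gigabit {t_ge * 1e6:10.1f} us, ratio {t_ge / t_ib:5.1f}x")
```

Under these assumptions, small messages are dominated by latency (a ratio near 20) and large messages by bandwidth (a ratio near 16).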

Storage Configuration

Athena has two principal storage components: "shared storage" and "locally-attached disk."

Shared storage

The storage system that completely satisfies 95% of users' needs is the shared-storage system. All of the filesystems mounted in the /share directory are physically resident on the same shared-storage product called "PolyServe" that is currently sold by HP. The allocations and purpose of each filesystem are described in detail in Disk Space and Node Allocation. We briefly list them here:

- /share/home: Users' home directories

- /share/data1: FiberChannel, 6.3TB

- /share/scratch1: FiberChannel, 1.6TB

- /share/scratch2: FiberChannel, 1.6TB

- /share/sdata1: SATA, 11TB

- /share/sdata2: SATA, 2.7TB

PolyServe functions as a "network-attached storage" (NAS) system wherein all of the compute nodes NFS mount the PolyServe filesystems. They do so over the InfiniBand network. This means that the connection to the PolyServe hardware is not only fast, it is actually faster than the connection to a node's local disk. Consequently, the PolyServe array normally provides superior I/O performance in addition to offering the convenience of being accessible from every node on Athena.

Nonetheless, there are a number of things to be aware of when trying to get the best performance out of our PolyServe NAS.

A discussion on how to best use shared-storage products like PolyServe and when to use locally-attached disk is given in optimizing Athena's I/O performance.

Locally-attached disk

Each compute node also has 123GB of locally attached SAS storage in /state/partition1. We encourage people to only use locally-attached disk when necessary and to please clean up after themselves. Files on any of the locally-attached disks are subject to deletion at any time.
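One way to make cleanup automatic is to wrap local-disk use in a context manager that removes the directory when the work finishes, even if it fails. This is a minimal sketch, not a site-provided tool: the helper name `local_scratch` and the `job-` prefix are hypothetical, and only the `/state/partition1` path comes from the text above.

```python
import os
import shutil
import tempfile
from contextlib import contextmanager

@contextmanager
def local_scratch(base="/state/partition1", prefix="job-"):
    """Create a private scratch directory under `base`; delete it on exit.

    `local_scratch` and the "job-" prefix are illustrative names only.
    """
    path = tempfile.mkdtemp(prefix=prefix, dir=base)
    try:
        yield path
    finally:
        # Remove everything the job wrote, even if the job raised an error.
        shutil.rmtree(path, ignore_errors=True)
```

A job would then do its local I/O inside `with local_scratch() as scratch:` and copy anything worth keeping to shared storage before the block ends.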

Performance

Each Phase I 2.33 GHz core is capable of 4 floating point operations per clock cycle. Therefore, the maximum performance possible on the 128 original Phase I nodes is as follows:

- 9.32 GFlop/s per core

- 37.28 GFlop/s per socket (4 cores/socket)

- 74.56 GFlop/s per node (2 sockets/node)

- 9.544 TFlop/s for all 128 original nodes
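The Phase I figures follow directly from clock rate times floating point operations per cycle, scaled up by cores, sockets, and nodes. The short sketch below reproduces that arithmetic; the constant and function names are illustrative only.

```python
# Theoretical peak for Phase I: clock (GHz) x flops/cycle x number of cores.
CLOCK_GHZ = 2.33
FLOPS_PER_CYCLE = 4
CORES_PER_SOCKET = 4
SOCKETS_PER_NODE = 2
NODES = 128

def peak_gflops(clock_ghz, flops_per_cycle, cores=1):
    """Theoretical peak in GFlop/s for `cores` cores at the given clock."""
    return clock_ghz * flops_per_cycle * cores

per_core = peak_gflops(CLOCK_GHZ, FLOPS_PER_CYCLE)
per_socket = peak_gflops(CLOCK_GHZ, FLOPS_PER_CYCLE, CORES_PER_SOCKET)
per_node = per_socket * SOCKETS_PER_NODE
all_nodes = per_node * NODES

print(f"per core:   {per_core:8.2f} GFlop/s")
print(f"per socket: {per_socket:8.2f} GFlop/s")
print(f"per node:   {per_node:8.2f} GFlop/s")
print(f"128 nodes:  {all_nodes / 1000:8.3f} TFlop/s")
```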

Each Phase II 3.16 GHz core is about 1.36 times faster than a 2.33 GHz core. At the same 4 floating point operations per clock cycle, the peak figures are:

- 12.64 GFlop/s per core

- 50.56 GFlop/s per socket (4 cores/socket)

- 101.12 GFlop/s per node (2 sockets/node)

- 1.213 TFlop/s for all 12 upgrade nodes

- 10.757 TFlop/s for all 140 compute nodes

In reality, you are doing really well if you manage to get about 30% of this. Most untuned applications can expect to get in the neighborhood of 10% of peak at best.

Linpack Benchmarks

For Linpack performance measurements, Rpeak is the theoretical maximum number of floating point operations per second, in our case 9.544 TFlop/s for the 128 original compute nodes. Rmax is the maximum speed attained by the benchmark code.

| Cores | Rmax (GFlop/s) | Rpeak (GFlop/s) | % of peak |
|---|---|---|---|
| 1016 | 4518.3 | 9469.1 | 47.7% |
| 880 | 3968.9 | 8201.6 | 48.4% |
| 32 | 200.3 | 298.2 | 67.2% |
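The Rpeak and percent-of-peak columns can be recomputed from the core count and the 9.32 GFlop/s per-core Phase I peak. The following sketch does so for the three runs in the table; the function name is illustrative only.

```python
# Recompute Rpeak and % of peak for the Linpack runs listed in the table.
PEAK_PER_CORE_GFLOPS = 9.32  # Phase I: 2.33 GHz x 4 flops/cycle

def percent_of_peak(cores, rmax_gflops, peak_per_core=PEAK_PER_CORE_GFLOPS):
    """Return (Rpeak in GFlop/s, Rmax as a percentage of Rpeak)."""
    rpeak = cores * peak_per_core
    return rpeak, 100.0 * rmax_gflops / rpeak

runs = [(1016, 4518.3), (880, 3968.9), (32, 200.3)]  # (cores, Rmax) from the table
for cores, rmax in runs:
    rpeak, pct = percent_of_peak(cores, rmax)
    print(f"{cores:5d} cores: Rpeak {rpeak:7.1f} GFlop/s, {pct:4.1f}% of peak")
```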

I/O Performance

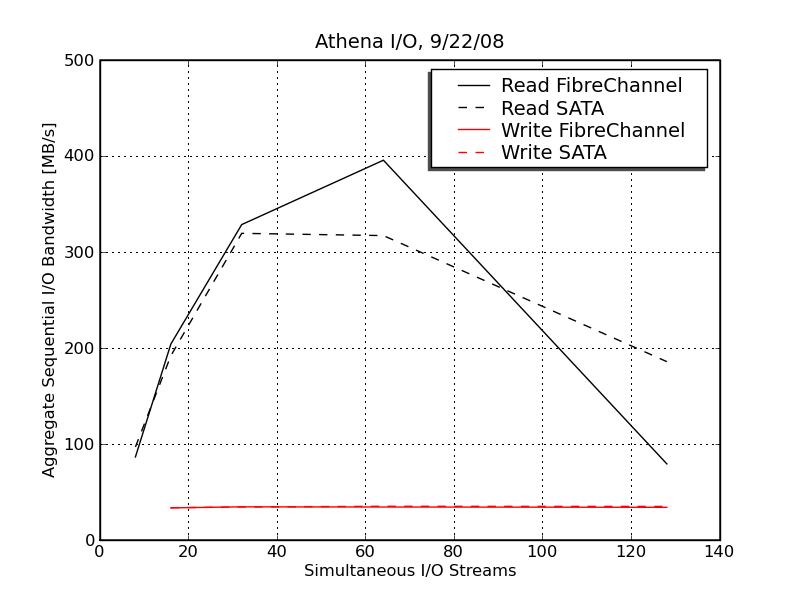

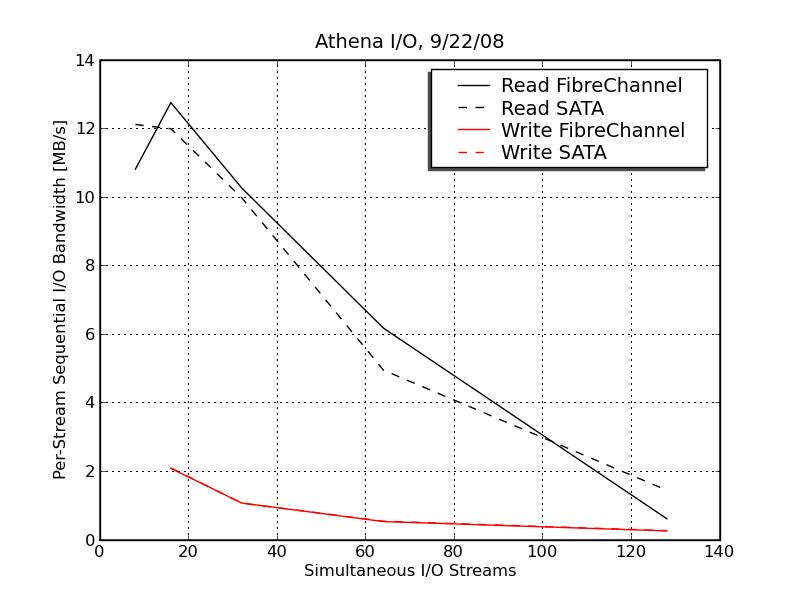

The PolyServe SAN has very different read and write characteristics. From the two figures, we see that read performance, while it does not scale linearly with the number of I/O streams, continues to grow quite well up to around 64 read streams, after which it degrades. For small numbers of streams, there appears to be little difference between FibreChannel and SATA arrays for sequential I/O. Interestingly, the FibreChannel disks deliver a bit more at 64 streams, but the SATA array pulls ahead at higher stream counts.

The test that was performed was a simultaneous read/write with 1 I/O stream per core. All streams read/write from/to the same file, but each from/to a unique contiguous block within that file.

Write behavior is a little more finicky when using PolyServe. The write speed of most parallel filesystems is limited by their ability to update metadata (e.g. filenames, permissions, locations, etc.). This is because the metadata must be constantly synchronized across all of the filesystem's service nodes (this is different from the file data itself, for which the filesystem does not need to maintain a fully coherent view). For the write test illustrated in the figures, having all streams write to a single file trips the metadata bottleneck, and write performance is a constant ~43 MB/s no matter how many streams you have open and no matter which partition you use. The lesson here is that to get good write performance out of the PolyServe system, each stream must write to its own file in its own subdirectory. For more discussion on this, see the section on optimizing Athena's I/O performance.
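The one-file-per-stream, one-subdirectory-per-stream layout recommended above could be set up along these lines. This is a minimal sketch: the helper names, the `stream_NNNN` directory naming, and the `output.dat` filename are all hypothetical, and `stream_id` stands for whatever unique index a job assigns to each writer (for example, an MPI rank).

```python
import os

def stream_output_path(base_dir, stream_id, filename="output.dat"):
    """Return a path unique to this stream: its own file in its own subdirectory.

    The "stream_NNNN" naming scheme is illustrative, not a site convention.
    """
    subdir = os.path.join(base_dir, f"stream_{stream_id:04d}")
    os.makedirs(subdir, exist_ok=True)
    return os.path.join(subdir, filename)

def write_stream(base_dir, stream_id, data):
    """Write this stream's bytes to its private file and return the path."""
    path = stream_output_path(base_dir, stream_id)
    with open(path, "wb") as f:
        f.write(data)
    return path
```

Each writer would call `write_stream` with a `base_dir` on one of the /share filesystems and its own `stream_id`, so that no two streams share a file or a directory.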

For comparison, 400 MB/s is roughly what the Cray XT3 Lustre filesystem at PSC can sustain on about 50 or more cores. Not bad, considering the storage system alone for that machine is in the million dollar range. Of course, it also sustains similar throughput for writes to the same directory, and it does not degrade as the number of streams increases beyond ~60.